Elite Context Generation for Autonomous LLMs

Nexora Context is an MCP-accessible context agent that unifies your open & closed-source data. Your LLM asks for context → we search files, APIs & the web → your LLM answers with confidence.

Everything your LLM needs to know

Plug in files, drives, databases, SaaS tools and the public web. Nexora Context handles permissions, search and synthesis, so your LLM can stay focused on reasoning.

Bring your data

Sync docs, PDFs, wikis, code, tickets, emails & more. Incremental, streaming ingestion.

MCP-native tools

Expose retrieval & summarization tools to any MCP-compatible LLM or agent framework.

Granular access

Row- and field-level controls, scoped API keys, audit trails & redaction.

Augmented web

Headless browser & SERP adapters with safety & source attribution.

Knowledge graphs

Automatically map relationships between entities, concepts, and documents for deeper contextual understanding.

Enterprise-grade

SOC 2 (in progress), encryption at rest, SSO, org workspaces.
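The "Granular access" card mentions row- and field-level controls with redaction. The sketch below shows how those two levels can combine at query time. It assumes that each indexed record carries a set of groups allowed to see it (row level) and optional per-field group sets (field level). The `Record` type, the group names and the `[REDACTED]` marker are hypothetical illustrations, not Nexora Context's actual data model or API.

```python
from dataclasses import dataclass, field

REDACTED = "[REDACTED]"  # hypothetical placeholder for hidden field values


@dataclass
class Record:
    """A hypothetical indexed record carrying row- and field-level ACLs."""
    fields: dict[str, str]
    allowed_groups: set[str]  # row level: who may see the record at all
    field_acl: dict[str, set[str]] = field(default_factory=dict)  # field level


def visible_records(records: list[Record], user_groups: set[str]) -> list[dict[str, str]]:
    """Drop records the user cannot see, then redact restricted fields."""
    results = []
    for rec in records:
        # Row-level check: the user must share at least one group with the record.
        if not rec.allowed_groups & user_groups:
            continue
        view = {}
        for name, value in rec.fields.items():
            acl = rec.field_acl.get(name)
            # Fields without their own ACL inherit row visibility.
            view[name] = value if acl is None or acl & user_groups else REDACTED
        results.append(view)
    return results


records = [
    Record({"title": "Q3 roadmap", "budget": "$1.2M"}, {"eng", "finance"},
           {"budget": {"finance"}}),
    Record({"title": "Board minutes"}, {"exec"}),
]
print(visible_records(records, {"eng"}))
# → [{'title': 'Q3 roadmap', 'budget': '[REDACTED]'}]
```

Checking the row-level ACL first means a user never learns that a hidden record exists. Field-level checks then decide only which values inside a visible record are masked.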

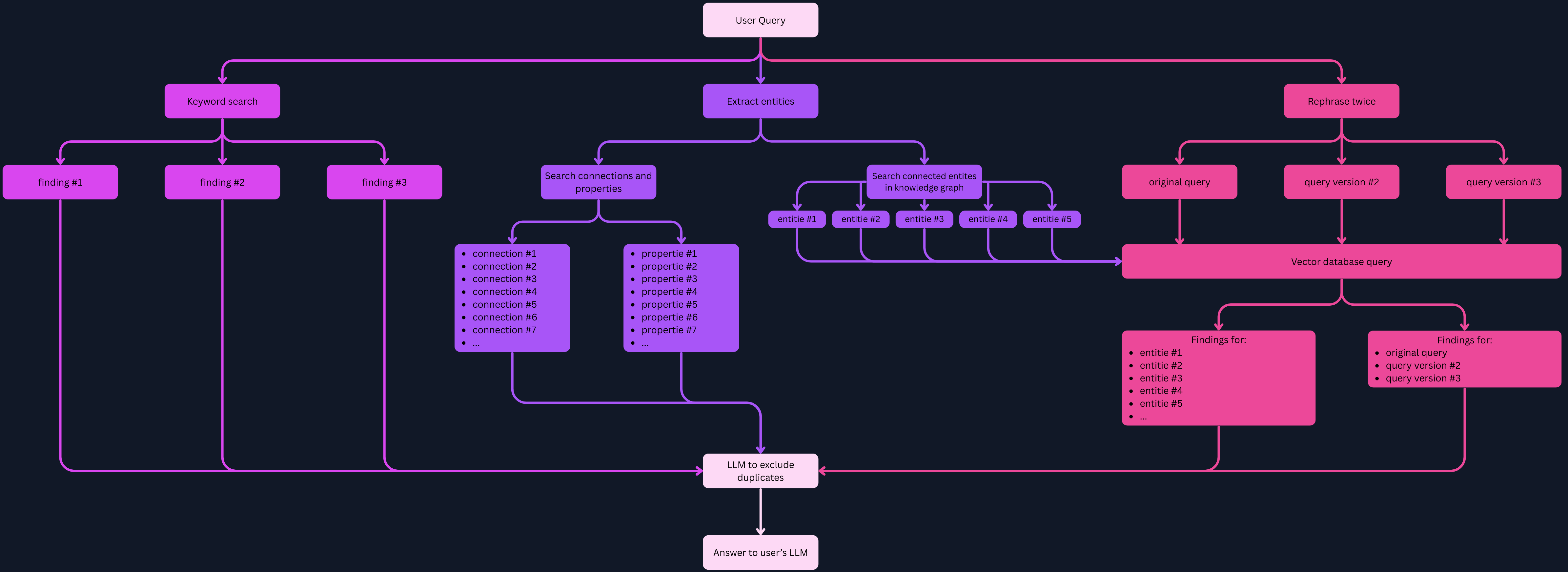

How our RAG pipeline works

The diagram above shows how a query flows through our Retrieval-Augmented Generation (RAG) system. We combine three complementary retrieval strategies to surface the most relevant and reliable results:

- Keyword search for straightforward matches in your data.

- Entity extraction & knowledge graph lookups to understand relationships and properties.

- Semantic search in a vector database with multiple query reformulations to capture meaning, not just words.

All findings are gathered, de-duplicated by the LLM, and returned as a single, precise answer. This multi-path approach gives your model both breadth (wide coverage of possible sources) and depth (rich, contextual information).
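The three retrieval paths and the merge step described above can be sketched as a toy over an in-memory corpus. Several components are stand-ins and are not Nexora Context's implementation:

- A bag-of-words count vector replaces a real embedding model.
- A hand-written list of (subject, relation, object, source) triples replaces the knowledge graph.
- Query reformulations are supplied by hand rather than generated by an LLM.
- Results are de-duplicated by document ID instead of by the LLM.

All names (`DOCS`, `TRIPLES`, `retrieve` and so on) are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus and knowledge graph (subject, relation, object, source doc).
DOCS = {
    "d1": "Nexora indexes PDFs and wikis",
    "d2": "Acme acquired Globex",
    "d3": "Globex builds headless browsers",
    "d4": "Globex was bought by Acme in 2021",
}
TRIPLES = [
    ("acme", "acquired", "globex", "d2"),
    ("globex", "builds", "headless browsers", "d3"),
]
STOPWORDS = {"who", "was", "by", "in", "and", "the", "a"}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_search(query: str) -> list[str]:
    """Path 1: documents containing every non-stopword query term."""
    terms = set(tokenize(query)) - STOPWORDS
    return [d for d, text in DOCS.items() if terms and terms <= set(tokenize(text))]


def graph_lookup(query: str) -> list[str]:
    """Path 2: naive entity extraction, then follow triples mentioning an entity."""
    entities = set(tokenize(query))
    hits: list[str] = []
    for subj, _rel, obj, doc_id in TRIPLES:
        if (subj in entities or obj in entities) and doc_id not in hits:
            hits.append(doc_id)
    return hits


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(t for t in tokenize(text) if t not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(n * b[t] for t, n in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_search(query: str, reformulations: list[str], threshold: float = 0.5) -> list[str]:
    """Path 3: score each doc against the query and every reformulation, keep the best."""
    best: dict[str, float] = {}
    for variant in [query, *reformulations]:
        qv = embed(variant)
        for doc_id, text in DOCS.items():
            best[doc_id] = max(best.get(doc_id, 0.0), cosine(qv, embed(text)))
    ranked = sorted(best, key=best.get, reverse=True)
    return [d for d in ranked if best[d] >= threshold]


def retrieve(query: str, reformulations: list[str]) -> dict[str, list[str]]:
    """Run all three paths and merge hits, de-duplicating by document ID."""
    paths = {
        "keyword": keyword_search(query),
        "graph": graph_lookup(query),
        "semantic": semantic_search(query, reformulations),
    }
    merged: dict[str, list[str]] = {}
    for path, doc_ids in paths.items():
        for doc_id in doc_ids:
            merged.setdefault(doc_id, []).append(path)
    return merged


print(retrieve("Who acquired Globex?", ["Who bought Globex?"]))
# → {'d2': ['keyword', 'graph', 'semantic'], 'd3': ['graph'], 'd4': ['semantic']}
```

Only the reformulation "Who bought Globex?" reaches `d4`, which never uses the word "acquired". Semantic search with reformulations is meant to close exactly this kind of gap. The three paths are independent of each other, so a real system could run them concurrently before merging.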

How Nexora Context works

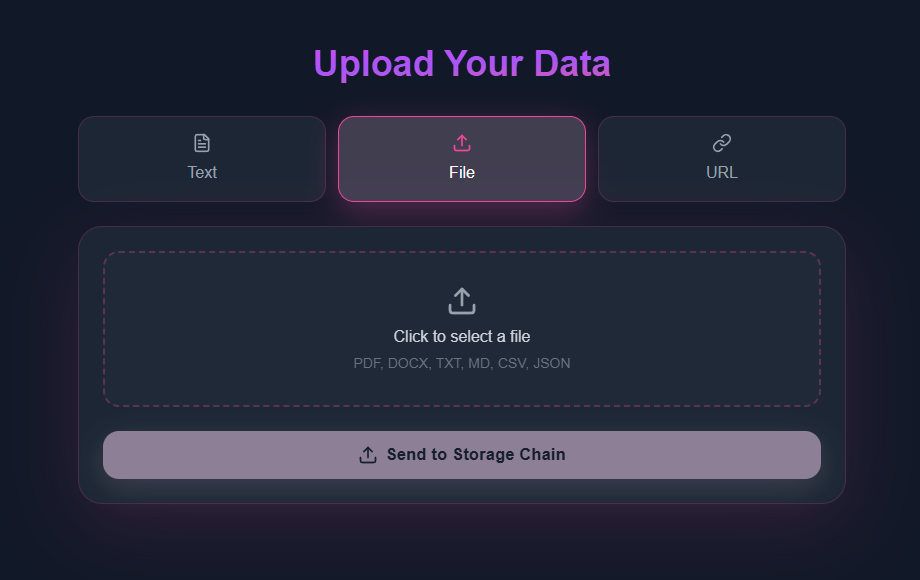

1. Connect sources: upload files, URLs or plain text.

2. Index & enrich: we extract, chunk, embed and classify content with metadata, respecting ACLs.

3. Ask via MCP: your LLM calls the `context.search` tool, and we return concise context.

4. Answer with confidence: your model composes a grounded reply.
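The "Ask via MCP" step runs over the Model Context Protocol, which uses JSON-RPC 2.0. The client sends a `tools/call` request naming the tool and its arguments, and the result carries a list of content blocks. The sketch below covers both sides of that exchange for `context.search`. The argument names `query` and `top_k` are hypothetical; the real ones would come from the tool's `inputSchema` as reported by `tools/list`. In practice, an MCP client SDK builds these messages for you.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request IDs must not be reused within a session


def build_search_call(query: str, top_k: int = 5) -> str:
    """Serialize an MCP `tools/call` request for the `context.search` tool.

    `query` and `top_k` are hypothetical argument names used for illustration.
    """
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {
            "name": "context.search",
            "arguments": {"query": query, "top_k": top_k},
        },
    }
    return json.dumps(request)


def extract_text(response: str) -> str:
    """Join the text content blocks of a `tools/call` result."""
    message = json.loads(response)
    if "error" in message:  # protocol-level JSON-RPC error
        raise RuntimeError(message["error"]["message"])
    result = message["result"]
    if result.get("isError"):  # tool-level failure reported inside the result
        raise RuntimeError("context.search reported an error")
    return "\n".join(b["text"] for b in result["content"] if b["type"] == "text")


print(build_search_call("What is our refund policy?"))

# Illustrative response shape only; the text is placeholder content.
sample = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "Example context chunk."}], "isError": False},
})
print(extract_text(sample))
```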


Give your LLM perfect context

Get accurate AI answers from your documents. Works with any MCP-enabled agent.